Let me start with a few facts. Our ocean is vast. It covers more than 2/3 of the Earth’s surface. Our ocean is alive. The life in it is found at the surface, as well as in the most extreme environments in the deepest submarine trenches. Through photosynthesis, phytoplankton at the surface of the ocean produce half of the oxygen on Earth, the same one that we breathe. The ocean regulates Earth’s climate, and more than 90% of the excess heat coming from human-induced global warming has been absorbed by it. No matter where we live, the ocean surely affects our life. Yet, 95% of the ocean remains mysterious to us. The main reason for this is that oceanography as a modern science is relatively young. It was only about 150 years ago that the great Challenger expedition cruises were launched to collect the first data about the ocean. With it, the foundation of the theoretical studies of the ocean on a wider scale began. Through time, numerous research vessels, drifters and buoys have been constructed and deployed to collect even more data from the ocean. Oceanographers examined the data, and our knowledge of its states and how it moves started to expand. We started to be more curious about marine biology, chemical components, and the physical properties of our ocean. We wanted to know more about ocean life, its currents and winds. But each deployment was rather expensive, and the result of it was that all collected data were sporadic both in time and space, and it was just not sufficient to characterize all the spatially diverse parts of the ocean. By the end of 1970s, technological growth enabled remote observations of the ocean’s surface with the launch of the first satellites. With the first observations taken from Earth’s orbit, humankind was finally able to see ocean currents on a wider scale.

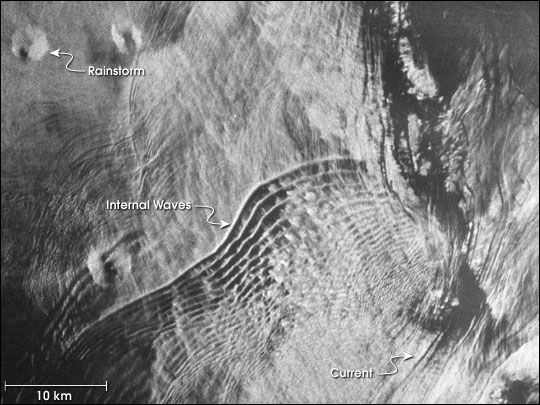

Gulf of Mexico area, North East of the Yucatan Peninsula. The image was taken by the imaging radar that was built into the Seasat satellite. The satellite was launched on June 26, 1978 (Image courtesy of NASA Jet Propulsion Laboratory).

Since then, rapid development of ocean observation technologies have resulted in explosive growth of various kind of data, and this data is capturing the ocean’s essence. Oceanographers are able to explain newly observed physical phenomena, such as internal waves, energy distribution, thermohaline circulation, and more. We have learned so much more about the properties of our seas, and now we know how life is possible in the deepest parts, far away from the euphotic zones. Each new data that we collect contain new information about the ocean, and we as a scientists need to find ways to extract this information. Today this is not an easy task because the data just keep pilling.

Simultaneously, the modelling community is also experiencing its own rapid development, which results in even more data produced by the numerical short-term and climate prediction systems. These types of data are important to us because they are giving us a glance into what the future might look like. Today we have tons of data in oceanography that is growing at an exponential rate, and this is somewhat new. Getting all these data together is a challenge. Most of the oceanographic data analysis techniques that we are familiar with are not designed to handle large amounts of data series. Simply put, the amount of data is just too big for any traditional computing system, let alone a single mind to handle it. And if you are dealing with spatially and temporally varying data, such as is the case of real ocean behavior, the analysis of these massive data, i.e. big data, is not straightforward. Whether we want it or not, we are now facing necessary changes in traditional oceanography, and we need to find a way to balance the knowledge that we already have with all of the new data that keeps coming. The way I see it, a new era in oceanography is beginning, the one that includes the development of novel techniques to deal with big data.

Image created by Natalija Dunić

Image created by Natalija Dunić

As a young researcher, I started to work with big data. I was using old analysis techniques which resulted in data-intensive computing. Quite soon I came across multiple obstacles. My desktop computer did not have enough power to handle terabytes and terabytes of data, and I had to quickly learn smart programming techniques just so my computer wouldn’t crash. Sometimes the metadata were not uniform, and the extraction of data lasted for several weeks. This gave me nightmares. The way that I was performing data mining felt like I was digging a real mine with a spoon. At the end it worked; I have two scientific papers that proves it. However, the consumption of my energy and time was substantial, and those matter a lot, not only for me but also for a number of my colleagues. After four years, I wished there was a better and more efficient way to do data mining within big data sets. And I think there could be. The novelties available in the technological revolution today, especially the possibilities that lie within Artificial Intelligence (AI), are opening a whole range of possibilities in oceanography. The traditional big data mining that I was conducting (and that the majority of the ocean scientists are still doing) was mostly based on the division of data and on scale transformations, and this takes time. To effectively improve oceanographic big data mining, the development of automatic data mining methods based on AI solutions are urgently needed. Machine learning techniques have already been proven to be quite efficient in the business sector, for past data analysis and for future prediction models. And I don’t see any reason why those techniques wouldn’t work in oceanographical sciences. But we need to keep in mind that, in order to successfully use novel analysis methods, data providers need to establish agreeable ways for data sharing, as well as on their analysis and expression. Unfortunately, current research institutions represent the core of data possession, but they do not exploit them to their fully potential. I see this as a huge obstacle.

In the era of rapidly evolving global warming threats that we are living in today, an accurate and precise prediction of changes in the ocean’s and in the earth’s climate is a must for making smart and scientifically based decisions. The same decisions that will help all of us to mitigate today and in the future. And we are all aware of the urgency of this matter. But to get there, we need an accurate and polished forecasting of ocean-atmosphere system. This means that instead of numerous parametrizations that present only an approximation of certain physical processes, we need to make an explicit description of them. And detailed analysis of the data that we already have could make this happen. If we could exploit the data to their full capacity, this would create a breakthrough in marine sciences, such as climate predictions, water quality surveys and of course, in scientific research. We could even create a virtual and interactive ocean. Further, these data could be combined with societal and economic data to get an even wider picture of the real world we are living in today.

There are not a lot of scientists in marine research worldwide who would be up to this task. But the data scientist doesn’t have to be an oceanographer at all, and this is the beaty of it. Instead of the quasi biased and limited analysis that we are performing today, one can also exploit the data in an objective point of view, using AI techniques. At the end of the day, why not share data on our environment with everybody? You never know when someone will make the best of them, for a better tomorrow for all of us…